💡Bottom Line Up Front

Data credibility is the single biggest barrier to delivering mission results. Fixing it doesn’t require boiling the ocean—you start by repairing the small set of critical data that drives trust, decisions, and funding.

We've spoken with dozens of government technology leaders over the years, and they've shared a surprising insight. The most significant career risk they face isn't a single project failure. It's the slow, steady loss of trust caused by unreliable data.

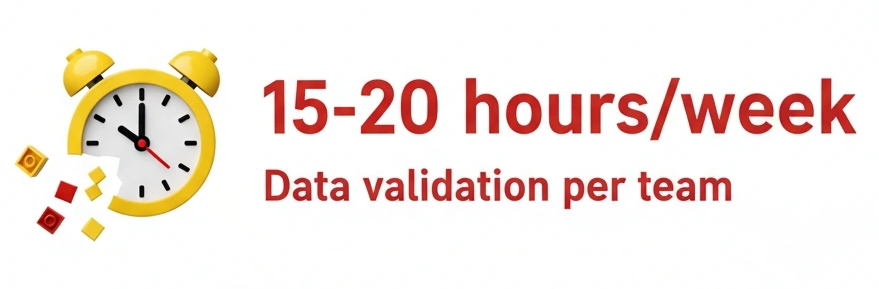

Many of them describe a similar pattern. Your team spends days, sometimes weeks, preparing a critical report for executive leadership or an oversight committee. A few days before the briefing, you discover the numbers don't add up. This triggers a frantic "fire drill" with teams scrambling for 15-20 hours to manually reconcile the data across siloed systems.

One city CIO described preparing a leadership report showing that public works was hitting service request targets 90% of the time. At the last minute, deeper analysis revealed that departments were marking requests as "closed" before actual completion, and some were missing timestamps entirely. "We almost shared that data with the council," he said. "That would have painted a very bad picture." The discovery forced a report pullback and emergency data cleanup.

The Hidden Reality: CIOs tell us they spend a significant amount of time on data cleanup and firefighting, but they never catch everything. Bad data surfaces sooner or later.

This does more than just burn out your best people. It kills trust. Each flawed report makes you look like you either don't know what's going on or aren't being transparent. This is the data credibility crisis, and CIOs report that it is one of the biggest threats to their careers.

Unreliable data isn't a "nice to fix" problem. It is a meaningful tax on public resources: it drains budgets, wastes talent, and creates significant project risk long before anyone notices the bad data.

This article breaks down the real-world consequences of this crisis. We'll show the costs, explore root causes, and look at the career risks for leaders who can't trust their own data.

The Hidden Tax: Quantifying the Cost of Bad Data

Direct Answer: Federal improper payments, many of which stem from failures of data verification, totaled about $121 billion in FY2024, and Gartner estimates that poor data quality costs the average organization $12.9 million per year. Some government technology teams report spending 15-20 hours every week on manual data validation, diverting talent from strategic work and increasing project failure risk.

The most visible symptom of this crisis is direct financial waste. While a local government might experience this as the daily fire drill of pulling accurate reports or as unexpected project overruns, the federal government sees the same problem at a national scale. The GAO reports that federal improper payments totaled about $121 billion in FY2024, with a cumulative cost of $2.8 trillion since 2003. These aren't just accounting errors; many reflect failures of data verification.

The problem isn't limited to government. Gartner estimates that the average organization loses $12.9 million annually to poor data quality. It's the same systemic breakdown, whether it's showing up in your council report or in the federal budget.

This credibility tax hurts productivity. When leaders can't trust the data they're given, it triggers a wave of manual validation. At 15-20 hours a week, some skilled technology teams spend nearly half their time on low-value data cleanup instead of driving strategic initiatives. This isn't just inefficient; it's a waste of your most valuable talent.

⚠️Watermelon Reporting

Another damaging aspect of the data crisis is what one CIO described as "watermelon reporting": projects that appear green on the surface but are red underneath. A state-level CIO recently told us about presenting positive status reports to a legislative oversight committee, only to announce significant delays just weeks later when the underlying problems could no longer be ignored. "That made me look like I didn't know what I was doing or even worse... I wasn't transparent," he explained. "My credibility definitely took a hit."

Bad data is a key cause of underperformance and project failure, especially in large-scale modernizations. Consider the California DMV's attempt to overhaul its technology. The state canceled the $208 million project after already spending $135 million, citing one primary reason: the contractor's inability to complete the required data integration tasks. This isn't an isolated incident. The GAO has reported that modernizing legacy systems carries an elevated risk of failure, and migrating and cleaning up decades of unreliable data is a significant part of that risk.

The Root Causes of Unreliable Government Data

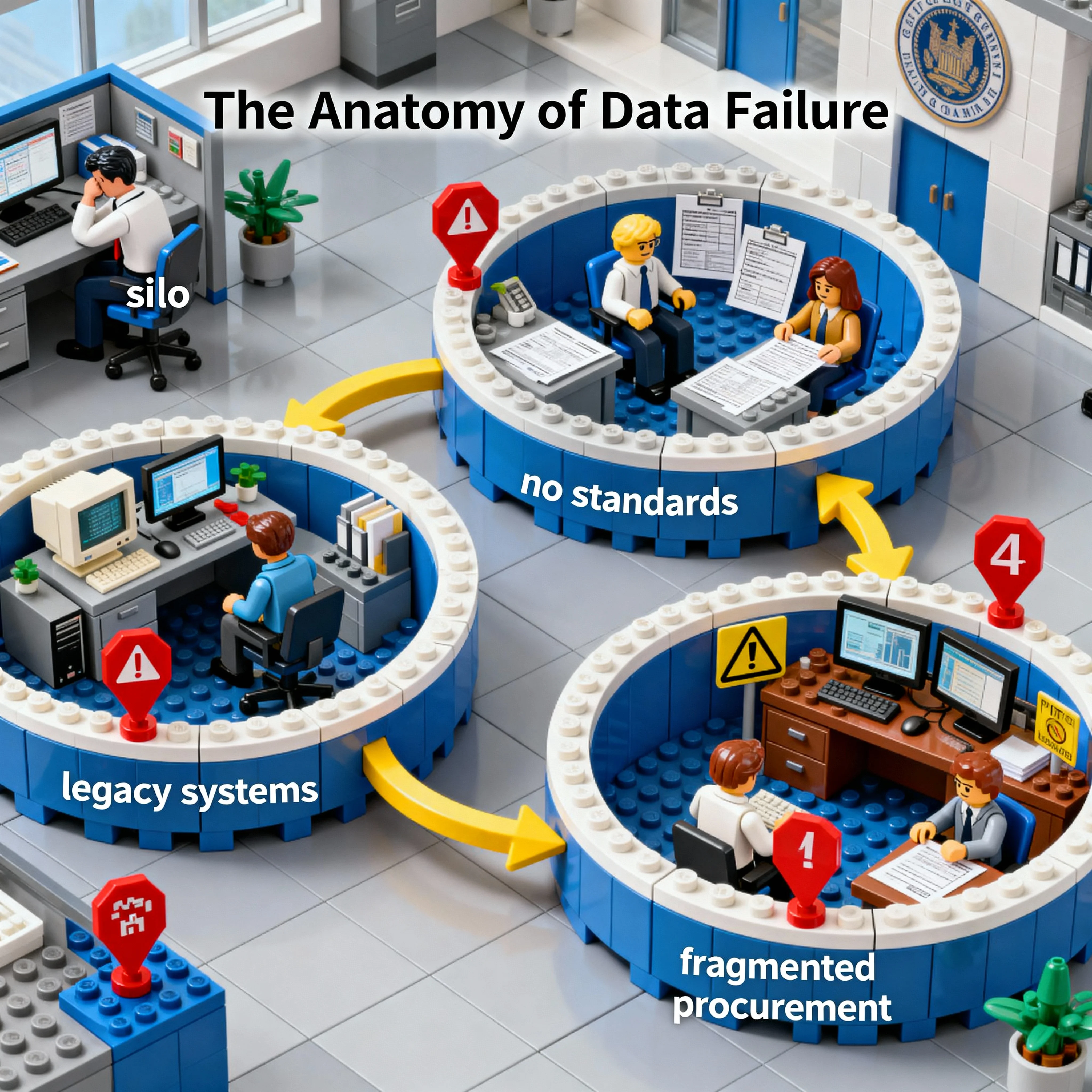

Direct Answer: Government data problems stem from four systemic issues: data silos created by organizational structures, lack of standardized definitions across departments, legacy systems with incompatible formats, and fragmented procurement that bypasses enterprise architecture. These create disconnected data islands that are expensive to reconcile.

The data credibility gap isn't any one person's fault. It's the result of long-standing problems in how public sector organizations operate.

1. Data Silos: When Org Charts Define Data Architecture

In government, data systems are often a mirror of the organizational chart. Finance, public safety, and public works all procured their own systems to serve their own missions. The result is a landscape of disconnected "islands" of data.

A clear example comes from Pennsylvania, where an audit found that six regional environmental offices collected water quality complaints in separate databases that didn't connect to a central system. This siloed approach meant state leaders had no reliable, unified view of a critical public health issue, hindering their ability to respond effectively. When information is fragmented like this, every report that needs an enterprise-wide view of operations takes recurring manual effort to assemble.

2. Lack of Standardization: The Government's Tower of Babel

Making the silo problem worse is the lack of a common language. A metric as simple as "active employee" or "completed project" can have multiple, conflicting definitions across HR, finance, and program offices.

💡Real-World Tower of Babel Example

A city CIO recently shared a perfect example of how silos and inconsistent definitions compound each other. When preparing a report on housing and permit processing times for the city council, his planning department reported an average of 16 days while the permitting office showed 33 days for the exact same process.

Both were technically correct; the planning department started counting from application submission, while permitting started from when paperwork was reviewed. The city council needed clear answers for a multi-million-dollar budget decision, but instead got two conflicting versions of the truth.
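To see how two departments can both be "right," here is a minimal sketch in Python. The permit records, milestone names (`submitted`, `reviewed`, `issued`), and the `avg_processing_days` helper are all hypothetical, not data from the city in the example; the point is only that the same records yield different averages depending on which event starts the clock.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Permit:
    # Hypothetical milestones; real permitting systems will name these differently.
    submitted: date  # applicant submits the application
    reviewed: date   # staff begin reviewing the paperwork
    issued: date     # permit is issued


# Illustrative sample records, not data from the city described above.
PERMITS = [
    Permit(date(2024, 3, 1), date(2024, 3, 8), date(2024, 3, 20)),
    Permit(date(2024, 3, 4), date(2024, 3, 18), date(2024, 3, 29)),
    Permit(date(2024, 3, 11), date(2024, 3, 15), date(2024, 4, 2)),
]


def avg_processing_days(permits, start_field, end_field="issued"):
    """Average elapsed days between two named milestones on each permit."""
    return mean((getattr(p, end_field) - getattr(p, start_field)).days for p in permits)


# Same records, two definitions of "processing time".
from_submission = avg_processing_days(PERMITS, "submitted")
from_review = avg_processing_days(PERMITS, "reviewed")
print(f"Clock starts at submission: {from_submission:.1f} days")
print(f"Clock starts at review:     {from_review:.1f} days")
```

With this sample data, the two definitions produce averages of 22.0 and 13.7 days from identical records. The fix is organizational more than technical: agree on one definition of when the clock starts, document it, and have every report compute the metric the same way.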

3. Legacy Systems: The Anchor of Technical Debt

Many core government functions run on technology that is 20, 30, or even 50 years old. One city CIO described trying to integrate a new permitting system with their 1980s-era property database. The legacy system had no APIs, used proprietary file formats, and required a full-time contractor just to extract basic reports. "We're essentially running a digital museum," he said.

Another CIO said, "It's like trying to connect a Tesla to a 1970s gas pump." As a result, organizations end up with expensive, jury-rigged adapters that break frequently. These legacy systems effectively trap valuable data, making it harder to access and continuing a cycle of risky, expensive workarounds.

4. Fragmented Procurement: Buying Your Way into Fragmentation

The way government buys technology is a big part of its data problems. A city CIO recently described the reality: procurement and approval cycles that take 6-12 months from start to finish. "When each department can't wait that long, they find workarounds," he explained. They use emergency purchases, shadow IT, or cooperative contracts that bypass enterprise architecture review.

Each workaround creates another data island and, often, new cybersecurity exposure. The process encourages departments to buy standalone point solutions for specific problems with little regard for enterprise-wide data strategy. Every system bought in isolation adds another dataset to reconcile and another source of conflicting information, making it harder and harder for the CIO to connect and validate the data.

Cautionary Tales: When Data Fails in Government

These issues have real consequences for public safety, fiscal stability, and citizen trust. The examples below aren't isolated incidents; they are warnings about the data issues that may be sitting undiscovered in many government organizations.

Case Study: Florida's Unemployment System Collapse

During the COVID-19 pandemic, Florida's CONNECT unemployment system failed catastrophically. The $77 million system, which Governor Ron DeSantis later called a "jalopy," contained over 600 unresolved technical problems, including functions that automatically entered incorrect data. These weren't surprise discoveries. Data integrity issues had been documented since 2015, with multiple audits flagging persistent problems that were ignored.

When the pandemic hit, the system couldn't handle the 74,000+ weekly claims, leaving citizens frustrated and forcing the state to forfeit federal stimulus funds. The failure should not have surprised anyone: the data quality issues had been documented for years, and leadership chose to de-prioritize them.

Case Study: The Baltimore Water Billing Crisis

Despite a $133.6 million investment, Baltimore's water billing system suffered from systematic data integrity failures. Over 22,000 smart meters were inoperable, software glitches prevented the billing of major commercial customers like Johns Hopkins, and 8,000 customer complaints went unresolved. The result was over $26 million in annual lost revenue and a massive loss of public trust amid accusations that data was hidden for political reasons.

The "Watermelon" Dashboard: When Good Data Tells a Bad Story

Poor data quality in modernization programs creates a second, equally dangerous problem: misleading project status information. Even when teams have accurate system data, pressure to show progress can lead to "watermelon reporting": green on the surface, red underneath.

A state CIO experienced this during a major system modernization. His reports showed "green across the board" on milestones and spend. Informal conversations, however, revealed a growing defect backlog and systemic quality issues that the official data missed. He presented a positive report to an oversight board. Weeks later, he had to announce significant delays. His credibility took a hit.

Bad status data makes modernization progress hard to track. Pressure to report success also nudges teams toward metrics that look good rather than metrics that reveal the true state of the project.

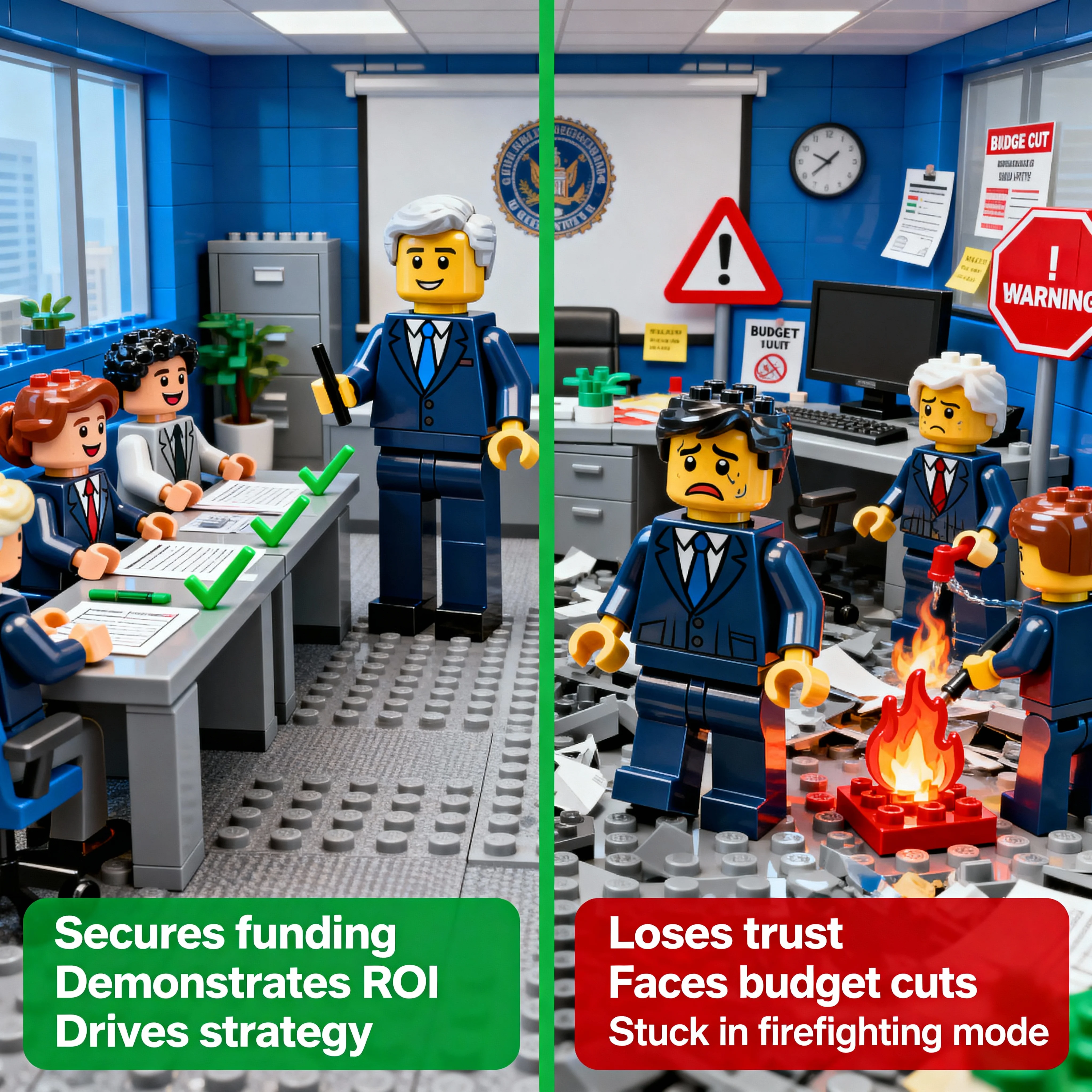

CIO Credibility: How Data Problems Damage Careers

The costs and failures caused by bad data ultimately land on one person's desk: the CIO's. For a government technology leader, unreliable data is a direct threat to your reputation, influence, and career.

Loss of Political Capital

In government, trust matters more than anything else. When you present flawed data, you lose that trust. A government CIO learned this lesson when preparing an emergency response times report for city leadership. Fire, police, and medical teams all used different definitions for when the response clock starts. The result was conflicting data for the same incidents that required manual reconciliation and forced his team to work unpaid overtime to try to produce consistent numbers.

🚨Critical Public Safety Stakes

A local government CIO told us, "The data was a mess. But the public safety stakes [for the report] were too high to make an error." When you can't quickly provide reliable data on life-or-death services, leadership questions your competence.

When leaders stop trusting your numbers, you lose political capital. You can't effectively advocate for your budget or champion new initiatives if leadership doesn't trust your data.

Damaged Professional Reputation

Ongoing data quality issues make it look like you're not in control of your own department. Leadership doesn't see these as organizational problems; they see them as your problem. No leader wants to spend time apologizing for bad data instead of working towards a better future or talking about wins.

Inability to Prove Value

A key part of the modern CIO's job is proving the value of technology investments. This is impossible without credible data. One government CIO learned this lesson when implementing a customer support chatbot. The underlying policies and procedures were "written for policy wonks, not user-friendly for AI consumption." The result? "It became a glorified search engine," he said. "We didn't get ROI on it."

This is the "ROI Black Hole." You can't establish an accurate baseline or measure improvements. Without that, you can't build a business case to secure the investment needed to fix the underlying problems.

Decision Paralysis

Finally, a lack of trusted data forces teams into a reactive, defensive posture. One state CIO said his team spends 15-20 hours per week just "gathering, validating, and checking status data." For major compliance reports, that jumps to 200-250 hours per report.

When this much time goes to data fire drills, strategy gets pushed aside. Leaders delay decisions. They rely on anecdote. Governance shifts to guesswork because no one has solid data for informed choices.

Stop Data Firefighting, Start Leading

CIOs tell us that unreliable data is one of the biggest threats to their credibility and careers. The costs are real and the consequences are serious.

Data quality isn't just a technical problem to solve in a back room. It's an ongoing, often overlooked risk for many government organizations. Leadership has to recognize and highlight that risk, then take a strategic, methodical approach to solving it. The solution starts with identifying your most critical data using risk-based prioritization, then systematically fixing the highest-impact problems first.

The Strategic Approach

Risk Assessment

Identify which data failures would cause the most political or operational damage. Focus on data that supports critical decisions, public safety, or major budget requests.
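One way to make this prioritization concrete is a simple scoring rubric. The Python sketch below is a hypothetical example, not a standard method: the weights, the 1-5 likelihood scale, the `risk_score` helper, and the sample inventory are all assumptions you would replace with your own judgments. It multiplies an impact score (built from the criteria above: public safety, budget requests, critical decisions) by how often the data is known to fail.

```python
from dataclasses import dataclass

# Hypothetical weights: tune these to your own organization's priorities.
WEIGHTS = {"public_safety": 3, "budget_request": 2, "critical_decision": 2}


@dataclass
class Dataset:
    name: str
    public_safety: bool       # supports life-safety services?
    budget_request: bool      # feeds a major budget request?
    critical_decision: bool   # drives a critical leadership decision?
    failure_likelihood: int   # 1 (rarely wrong) to 5 (frequently wrong); a judgment call


def risk_score(ds: Dataset) -> int:
    """Impact (sum of weights for criteria that apply) times failure likelihood."""
    impact = sum(weight for flag, weight in WEIGHTS.items() if getattr(ds, flag))
    return impact * ds.failure_likelihood


# Illustrative inventory; the likelihood values are made up for this sketch.
inventory = [
    Dataset("Emergency response times", True, False, True, 4),
    Dataset("Permit processing times", False, True, True, 3),
    Dataset("Park facility bookings", False, False, False, 2),
]

# Highest-risk datasets first: these are the candidates for the first cleanup wave.
for ds in sorted(inventory, key=risk_score, reverse=True):
    print(f"{risk_score(ds):>3}  {ds.name}")
```

A multiplicative score keeps low-impact data near the bottom even when it fails often, which matches the goal of fixing what would cause the most political or operational damage first.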

Quality Assessment

Measure the current state of your top-priority data. How much manual cleanup time does it require? What's the error rate? How often does it force report delays?
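A quality assessment can start with simple, automated checks that put a number on the error rate. The Python sketch below assumes a hypothetical service-request extract (the field names `status`, `opened`, and `completed`, and the helpers `quality_issues` and `error_rate`, are illustrative) and flags the kinds of problems from the public works example earlier: missing timestamps and requests closed without a recorded completion. It cannot prove a request was closed prematurely; it only surfaces records that are internally inconsistent.

```python
from datetime import datetime
from typing import Optional, TypedDict


class ServiceRequest(TypedDict):
    id: str
    status: str                   # e.g. "open" or "closed" (hypothetical values)
    opened: Optional[datetime]
    completed: Optional[datetime]


def quality_issues(req: ServiceRequest) -> list[str]:
    """Return a list of rule violations for one record (empty if it looks clean)."""
    issues = []
    if req["opened"] is None:
        issues.append("missing opened timestamp")
    if req["status"] == "closed" and req["completed"] is None:
        issues.append("closed without completion timestamp")
    if req["opened"] is not None and req["completed"] is not None and req["completed"] < req["opened"]:
        issues.append("completed before opened")
    return issues


def error_rate(requests: list[ServiceRequest]) -> float:
    """Share of records with at least one quality issue."""
    if not requests:
        return 0.0
    flagged = sum(1 for r in requests if quality_issues(r))
    return flagged / len(requests)


# Illustrative records, not real city data.
sample: list[ServiceRequest] = [
    {"id": "SR-1", "status": "closed", "opened": datetime(2024, 5, 1, 9), "completed": datetime(2024, 5, 2, 14)},
    {"id": "SR-2", "status": "closed", "opened": None, "completed": datetime(2024, 5, 3, 10)},
    {"id": "SR-3", "status": "closed", "opened": datetime(2024, 5, 2, 8), "completed": None},
    {"id": "SR-4", "status": "open", "opened": datetime(2024, 5, 4, 11), "completed": None},
]

for r in sample:
    for issue in quality_issues(r):
        print(r["id"], issue)
print(f"Error rate: {error_rate(sample):.0%}")
```

Running checks like these on a schedule turns "the data feels unreliable" into a tracked error rate, which also gives you the baseline needed to show improvement later.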

AI-Enabled Cleanup

Use modern data tools to accelerate and improve the cleanup process. AI can identify patterns, flag anomalies, and automate much of the manual reconciliation work that currently burns out your teams.
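AI-assisted tools add capabilities such as fuzzy matching and pattern detection, but much of the manual reconciliation work they replace is a rule-based comparison between systems. The Python sketch below is deliberately not AI: it is a plain dictionary comparison, assuming two hypothetical extracts keyed by a shared request ID, with a hypothetical `reconcile` helper and a made-up dollar tolerance. It shows the kind of check that can run automatically instead of consuming staff hours before every report.

```python
# Hypothetical extracts from two siloed systems, keyed by a shared request ID.
finance = {"SR-101": 1200.00, "SR-102": 450.00, "SR-103": 980.00}
public_works = {"SR-101": 1200.00, "SR-102": 475.00, "SR-104": 300.00}


def reconcile(a: dict[str, float], b: dict[str, float], tolerance: float = 0.01):
    """Compare two extracts and return (only in a, only in b, values that disagree)."""
    only_a = sorted(a.keys() - b.keys())
    only_b = sorted(b.keys() - a.keys())
    mismatched = sorted(k for k in a.keys() & b.keys() if abs(a[k] - b[k]) > tolerance)
    return only_a, only_b, mismatched


only_finance, only_public_works, mismatched = reconcile(finance, public_works)
print("Only in finance:      ", only_finance)
print("Only in public works: ", only_public_works)
print("Amounts disagree:     ", mismatched)
```

Exact-key matching only works when both systems share an identifier; when they don't, that gap is where more sophisticated matching tools earn their keep.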

Demonstrate Risk

Show leadership and oversight bodies the real cost of doing nothing. Translate data quality issues into terms they understand: budget impacts, compliance risks, and political exposure.

Iterate

Once you've secured wins with your highest-priority data, repeat the process for the next tier. Build momentum and credibility with each success.

Data credibility problems are solvable with focused action. For federal CIOs building a trustworthy data foundation, we can share the playbook we use. Contact us to learn more.